Adding nodes to a Redis cluster (scaling out). This post adds 2 nodes to the cluster built in the earlier article on setting up a Redis 6.0.6 cluster on CentOS 7.

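First, follow the Redis configuration steps from the previous article and start 2 Redis nodes with the same configuration.

They listen on ports 7007 and 7008. Node 7007 will be a master and node 7008 will be its slave.

OS: CentOS 7

The new machine must be able to reach the existing node machines over the network, and the new nodes must not contain any data.

192.168.10.4 is the IP of the fourth machine (the new node server).

Confirm that the Redis services for nodes 7007 and 7008 are running: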

ps -ef |grep redis

root 18128 1 0 14:44 ? 00:00:00 /usr/local/redis/bin/redis-server 192.168.10.4:7007 [cluster]

root 18134 1 0 14:44 ? 00:00:00 /usr/local/redis/bin/redis-server 192.168.10.4:7008 [cluster]

root 18185 18061 0 14:46 pts/0 00:00:00 grep --color=auto redis
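Since the new machine has to be reachable from the existing nodes, a quick connectivity check before adding the nodes can save some debugging. The following is only a sketch under assumptions: it assumes the default cluster bus port offset of 10000 (which matches the `7007@17007` style entries in the `cluster nodes` output in this article), and `required_ports` and `port_open` are hypothetical helper names, not part of Redis.

```python
import socket

# Assumption: the cluster bus port is the client port plus 10000 (the Redis default).
CLUSTER_BUS_OFFSET = 10000


def required_ports(client_port):
    """Return the client port and the cluster bus port for one node."""
    return (client_port, client_port + CLUSTER_BUS_OFFSET)


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Ports that the existing machines would need to reach on the new server.
ports_to_check = [p for node in (7007, 7008) for p in required_ports(node)]
print(ports_to_check)
```

From one of the existing machines, calling `port_open("192.168.10.4", port)` for each entry in `ports_to_check` would show whether a firewall is blocking either the client port or the bus port.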

Run the add-node operation on one of the machines in the original cluster.

# Command syntax: redis-cli --cluster add-node new_node_ip:port existing_cluster_node_ip:port

# Add node 7007

[root@localhost ~]# redis-cli --cluster add-node 192.168.10.4:7007 192.168.10.1:7001

>>> Adding node 192.168.10.4:7007 to cluster 192.168.10.1:7001

>>> Performing Cluster Check (using node 192.168.10.1:7001)

M: f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006

slots: (0 slots) slave

replicates db2ae554b712331f12e728fc7d246c89fa79a78d

S: c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002

slots: (0 slots) slave

replicates 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

M: 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004

slots: (0 slots) slave

replicates f202aff7ae33e68a6d331b34b5c32ca71d91e8f3

M: db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.10.4:7007 to make it join the cluster.

[OK] New node added correctly.

# Add node 7008

[root@localhost ~]# redis-cli --cluster add-node 192.168.10.4:7008 192.168.10.1:7001

>>> Adding node 192.168.10.4:7008 to cluster 192.168.10.1:7001

>>> Performing Cluster Check (using node 192.168.10.1:7001)

M: f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006

slots: (0 slots) slave

replicates db2ae554b712331f12e728fc7d246c89fa79a78d

S: c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002

slots: (0 slots) slave

replicates 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

M: 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004

slots: (0 slots) slave

replicates f202aff7ae33e68a6d331b34b5c32ca71d91e8f3

M: 3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007

slots: (0 slots) master

M: db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 192.168.10.4:7008 to make it join the cluster.

[OK] New node added correctly.

# Check the cluster node information: the new nodes have joined the cluster and default to the master role

127.0.0.1:7001> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:8

cluster_size:3

cluster_current_epoch:7

cluster_my_epoch:1

cluster_stats_messages_ping_sent:3942

cluster_stats_messages_pong_sent:3899

cluster_stats_messages_sent:7841

cluster_stats_messages_ping_received:3892

cluster_stats_messages_pong_received:3942

cluster_stats_messages_meet_received:7

cluster_stats_messages_received:7841
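The `cluster info` output is a list of `key:value` lines, so the check above can be scripted. This is a minimal sketch, assuming the output has already been captured as text; `parse_cluster_info` and `nodes_joined` are hypothetical names introduced here for illustration.

```python
def parse_cluster_info(text):
    """Parse `cluster info` output (one key:value pair per line) into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        key, value = line.split(":", 1)
        info[key] = value
    return info


def nodes_joined(info, expected_nodes):
    """True when the cluster reports ok and knows the expected number of nodes."""
    return (
        info.get("cluster_state") == "ok"
        and int(info.get("cluster_known_nodes", 0)) == expected_nodes
    )


# A few fields copied from the output in this article.
sample = """cluster_state:ok
cluster_slots_assigned:16384
cluster_known_nodes:8
cluster_size:3
"""
info = parse_cluster_info(sample)
print(nodes_joined(info, expected_nodes=8))
print(info["cluster_size"])
```

Note that `cluster_size` is still 3 at this point: it counts masters that serve at least one slot, and the two new nodes do not own any slots yet.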

127.0.0.1:7001> cluster nodes

4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006@17006 slave db2ae554b712331f12e728fc7d246c89fa79a78d 0 1618556195000 3 connected

f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001@17001 myself,master - 0 1618556195000 1 connected 0-5460

c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002@17002 slave 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 0 1618556194000 5 connected

17c719e9919823b4e2da15d1a64412de63a4eb0c 192.168.10.4:7008@17008 master - 0 1618556194564 7 connected

2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005@17005 master - 0 1618556195669 5 connected 10923-16383

dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004@17004 slave f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 0 1618556195000 1 connected

3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007@17007 master - 0 1618556195568 0 connected

db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003@17003 master - 0 1618556194000 3 connected 5461-10922

Because 7007 is to serve as a master, it needs hash slots assigned to it; a newly added node is empty and owns no slots.
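The reshard that follows moves 4096 slots, which is an even share of the 16384 slots across 4 masters. The sketch below works through that arithmetic and estimates how many slots each existing master gives up when `all` is used as the source. The rounding rule (round up for the largest source, round down for the others) is an assumption on my part; it happens to agree with the slot ranges 7007 ends up with in this article (1365, 1366 and 1365 slots), but it should not be read as a specification of redis-cli. The function names are hypothetical.

```python
import math

TOTAL_SLOTS = 16384  # fixed number of hash slots in a Redis cluster


def even_share(master_count):
    """Slots per master if the slots are spread evenly."""
    return TOTAL_SLOTS // master_count


def source_contributions(slots_to_move, source_slot_counts):
    """Split slots_to_move across sources in proportion to the slots they hold.

    Assumption: the source holding the most slots is rounded up and the
    remaining sources are rounded down.
    """
    total = sum(source_slot_counts.values())
    ordered = sorted(source_slot_counts.items(), key=lambda kv: kv[1], reverse=True)
    plan = {}
    for index, (name, count) in enumerate(ordered):
        share = slots_to_move * count / total
        plan[name] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


# Slot counts of the three original masters, taken from the cluster check output.
current = {"7001": 5461, "7003": 5462, "7005": 5461}
to_move = even_share(master_count=4)
plan = source_contributions(to_move, current)
print(to_move)
print(plan)
```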

# Run the reshard against one of the cluster's master nodes

[root@localhost ~]# redis-cli --cluster reshard 192.168.10.1:7001

>>> Performing Cluster Check (using node 192.168.10.1:7001)

M: f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006

slots: (0 slots) slave

replicates db2ae554b712331f12e728fc7d246c89fa79a78d

S: c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002

slots: (0 slots) slave

replicates 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

M: 17c719e9919823b4e2da15d1a64412de63a4eb0c 192.168.10.4:7008

slots: (0 slots) master

M: 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004

slots: (0 slots) slave

replicates f202aff7ae33e68a6d331b34b5c32ca71d91e8f3

M: 3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007

slots: (0 slots) master

M: db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# How many slots to assign to the new node

How many slots do you want to move (from 1 to 16384)? 4096

# Which node receives the slots: enter the cluster node ID. To assign them to 7007, enter the ID of node 7007

What is the receiving node ID? 3da86e6d25d38d8fb9af80e87bfd5aeff867e309

# Which nodes to take the slots from; all means take them from all nodes

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all

······

(output omitted)

······

Moving slot 12278 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12279 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12280 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12281 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12282 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12283 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12284 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12285 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12286 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

Moving slot 12287 from 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b

# The lines above are the proposed slot moves; to carry out this plan, answer yes

Do you want to proceed with the proposed reshard plan (yes/no)? yes

······

(output omitted)

······

Moving slot 12281 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12282 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12283 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12284 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12285 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12286 from 192.168.10.3:7005 to 192.168.10.4:7007:

Moving slot 12287 from 192.168.10.3:7005 to 192.168.10.4:7007:

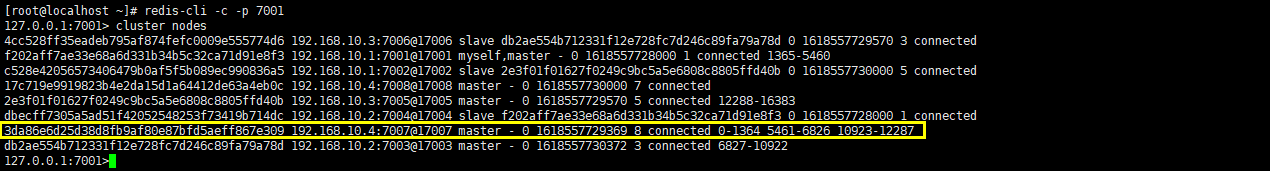
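The interactive prompts above can also be answered on the command line, which is convenient for scripting. The sketch below only builds the argument list; it relies on the `--cluster-from`, `--cluster-to`, `--cluster-slots` and `--cluster-yes` options listed by `redis-cli --cluster help`, and `build_reshard_command` is a hypothetical helper name. I have not run this exact command against the cluster in this article, so treat it as a starting point.

```python
def build_reshard_command(entry_node, target_node_id, slots, sources="all"):
    """Build a non-interactive `redis-cli --cluster reshard` argument list.

    entry_node     -- host:port of any node already in the cluster
    target_node_id -- node ID that receives the slots
    slots          -- number of slots to move
    sources        -- "all" or a comma-separated list of source node IDs
    """
    return [
        "redis-cli", "--cluster", "reshard", entry_node,
        "--cluster-from", sources,
        "--cluster-to", target_node_id,
        "--cluster-slots", str(slots),
        "--cluster-yes",  # accept the proposed plan without prompting
    ]


cmd = build_reshard_command(
    entry_node="192.168.10.1:7001",
    target_node_id="3da86e6d25d38d8fb9af80e87bfd5aeff867e309",  # node 7007
    slots=4096,
)
print(" ".join(cmd))
```

The resulting list could be passed to `subprocess.run(cmd, check=True)` on a machine that has `redis-cli` installed and can reach the cluster.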

[root@localhost ~]#

Check the cluster node information to confirm the slot assignment and the role of node 7007.

127.0.0.1:7001> cluster nodes

4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006@17006 slave db2ae554b712331f12e728fc7d246c89fa79a78d 0 1618557627073 3 connected

f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001@17001 myself,master - 0 1618557626000 1 connected 1365-5460

c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002@17002 slave 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 0 1618557626000 5 connected

17c719e9919823b4e2da15d1a64412de63a4eb0c 192.168.10.4:7008@17008 master - 0 1618557627073 7 connected

2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005@17005 master - 0 1618557626572 5 connected 12288-16383

dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004@17004 slave f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 0 1618557626000 1 connected

3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007@17007 master - 0 1618557626000 8 connected 0-1364 5461-6826 10923-12287

db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003@17003 master - 0 1618557626071 3 connected 6827-10922

Set node 7008 as a slave node

# Log in to node 7008 and run cluster replicate with the master's node ID (here, the ID of master 7007)

[root@localhost ~]# redis-cli -c -h 192.168.10.4 -p 7008

192.168.10.4:7008> cluster replicate 3da86e6d25d38d8fb9af80e87bfd5aeff867e309

OK

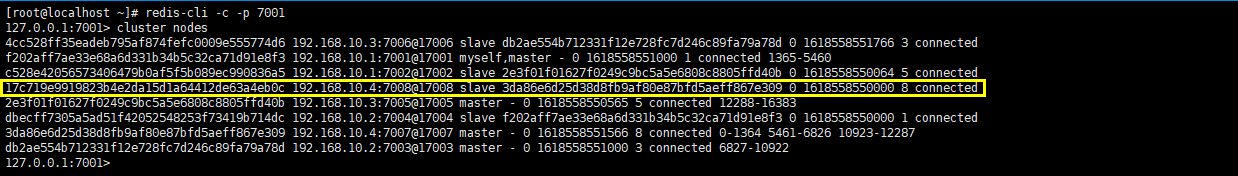

192.168.10.4:7008>

Check the cluster node information to confirm that 7008 was set as a slave.

[root@localhost ~]# redis-cli -c -p 7001

127.0.0.1:7001> cluster nodes

4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006@17006 slave db2ae554b712331f12e728fc7d246c89fa79a78d 0 1618558551766 3 connected

f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001@17001 myself,master - 0 1618558551000 1 connected 1365-5460

c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002@17002 slave 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 0 1618558550064 5 connected

17c719e9919823b4e2da15d1a64412de63a4eb0c 192.168.10.4:7008@17008 slave 3da86e6d25d38d8fb9af80e87bfd5aeff867e309 0 1618558550000 8 connected

2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005@17005 master - 0 1618558550565 5 connected 12288-16383

dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004@17004 slave f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 0 1618558550000 1 connected

3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007@17007 master - 0 1618558551566 8 connected 0-1364 5461-6826 10923-12287

db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003@17003 master - 0 1618558551000 3 connected 6827-10922

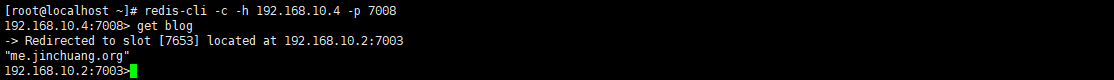
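The final `cluster nodes` output can be checked programmatically as well. The sketch below parses the text shown above and confirms three things: 7007 owns 4096 slots, 7008 replicates 7007, and all 16384 slots are still covered. It assumes the standard field order of `cluster nodes` (ID, address, flags, master ID, ping, pong, epoch, link state, then slots); `parse_cluster_nodes` is a hypothetical helper name.

```python
def parse_cluster_nodes(text):
    """Parse `cluster nodes` output into a list of dicts, one per node."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # not a node line
        slot_count = 0
        for token in parts[8:]:
            if token.startswith("["):
                continue  # slot being migrated or imported, not a plain range
            start, _, end = token.partition("-")
            slot_count += int(end or start) - int(start) + 1
        nodes.append({
            "id": parts[0],
            "addr": parts[1].split("@")[0],
            "flags": parts[2].split(","),
            "master_id": None if parts[3] == "-" else parts[3],
            "slots": slot_count,
        })
    return nodes


# Output copied from the final `cluster nodes` call in this article.
sample = """\
4cc528ff35eadeb795af874fefc0009e555774d6 192.168.10.3:7006@17006 slave db2ae554b712331f12e728fc7d246c89fa79a78d 0 1618558551766 3 connected
f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 192.168.10.1:7001@17001 myself,master - 0 1618558551000 1 connected 1365-5460
c528e42056573406479b0af5f5b089ec990836a5 192.168.10.1:7002@17002 slave 2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 0 1618558550064 5 connected
17c719e9919823b4e2da15d1a64412de63a4eb0c 192.168.10.4:7008@17008 slave 3da86e6d25d38d8fb9af80e87bfd5aeff867e309 0 1618558550000 8 connected
2e3f01f01627f0249c9bc5a5e6808c8805ffd40b 192.168.10.3:7005@17005 master - 0 1618558550565 5 connected 12288-16383
dbecff7305a5ad51f42052548253f73419b714dc 192.168.10.2:7004@17004 slave f202aff7ae33e68a6d331b34b5c32ca71d91e8f3 0 1618558550000 1 connected
3da86e6d25d38d8fb9af80e87bfd5aeff867e309 192.168.10.4:7007@17007 master - 0 1618558551566 8 connected 0-1364 5461-6826 10923-12287
db2ae554b712331f12e728fc7d246c89fa79a78d 192.168.10.2:7003@17003 master - 0 1618558551000 3 connected 6827-10922
"""

by_addr = {node["addr"]: node for node in parse_cluster_nodes(sample)}
master = by_addr["192.168.10.4:7007"]
replica = by_addr["192.168.10.4:7008"]
total_slots = sum(node["slots"] for node in by_addr.values())

print(master["slots"])
print(replica["master_id"] == master["id"])
print(total_slots)
```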

127.0.0.1:7001>

Test by reading a key

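Last updated: 2024-03-30

Article link: https://me.jinchuang.org/archives/1106.html

Unless marked as [reposted|quoted|sourced], all articles on this site are original content; please credit the source when reposting.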
