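Logstash, the real-time data collection engine commonly used with Elasticsearch, can collect data from many different sources, process it, and send it to a variety of outputs.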

This post continues the previous one, Elasticsearch 5.6 installation.

Download and install

[root@localhost ~]# cd /source

[root@localhost source]# wget https://artifacts.elastic.co/downloads/logstash/logstash-5.6.0.tar.gz

[root@localhost source]# tar xf logstash-5.6.0.tar.gz

[root@localhost source]# mv logstash-5.6.0 /elk/logstash

[root@localhost source]# cd /elk/logstash/config

# Create a configuration file; this example reads an nginx access log

[root@localhost config]# vim nginx-access.conf

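input {
  file {
    path => "/usr/local/nginx/logs/cloud.access.log"
    # only applies to files Logstash has not seen before
    start_position => beginning
    ignore_older => 0
  }
}

filter {
  grok {
    # parse each line with the built-in Apache/nginx combined log pattern
    match => { "message" => "%{COMBINEDAPACHELOG}" }
    # after a successful parse, drop the raw line and the file metadata
    remove_field => [ "message", "path", "host" ]
  }
}

output {
  elasticsearch {
    hosts => "127.0.0.1:9200"
    # one index per day, named from the event's @timestamp (UTC)
    index => "nginx-%{+YYYY.MM.dd}"
  }
}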
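To see roughly what this pipeline does to one access-log line, here is a minimal Python sketch. It is an approximation, not Logstash itself: the regex is a simplified stand-in for the grok `%{COMBINEDAPACHELOG}` pattern (the capture names follow that pattern), the field removal mirrors `remove_field`, and the index name mirrors `nginx-%{+YYYY.MM.dd}`, which Logstash formats from the event's `@timestamp` in UTC. The helper `process_line` and the sample log line are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# Simplified stand-in for grok's %{COMBINEDAPACHELOG}. The real pattern is more
# permissive (hostnames, missing HTTP version, raw requests), so this only
# handles well-formed lines.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?:(?P<bytes>\d+)|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def process_line(line, path, host, ingest_time):
    """Hypothetical helper: approximate file input -> grok -> elasticsearch output.

    ingest_time must be timezone-aware; without a date filter, @timestamp is the
    time Logstash read the line, not the time written inside the log entry.
    """
    # Fields the file input attaches to every event.
    event = {"message": line, "path": path, "host": host,
             "@timestamp": ingest_time.astimezone(timezone.utc)}
    match = COMBINED.match(line)
    if match:
        # Keep only captures that matched (bytes is absent when logged as "-").
        event.update({k: v for k, v in match.groupdict().items() if v is not None})
        # remove_field only runs when grok matches successfully.
        for field in ("message", "path", "host"):
            event.pop(field, None)
    else:
        event["tags"] = ["_grokparsefailure"]
    # index => "nginx-%{+YYYY.MM.dd}" is formatted from @timestamp in UTC.
    index = "nginx-" + event["@timestamp"].strftime("%Y.%m.%d")
    return index, event


line = ('192.168.1.10 - - [22/Nov/2018:07:29:58 +0800] "GET /index.html HTTP/1.1" '
        '200 612 "-" "curl/7.29.0"')
read_at = datetime(2018, 11, 22, 7, 30, tzinfo=timezone(timedelta(hours=8)))
index, event = process_line(line, "/usr/local/nginx/logs/cloud.access.log",
                            "localhost", read_at)
print(index)              # nginx-2018.11.21
print(event["response"])  # 200
```

The usage lines show a common surprise on a server in UTC+8: a line read at 07:30 local time goes into the previous day's index, because the daily index name is computed in UTC.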

# Start Logstash

[root@localhost config]# /elk/logstash/bin/logstash -f /elk/logstash/config/nginx-access.conf &

[1] 30030

2018-11-22 13:18:40,631 main ERROR Unable to locate appender "${sys:ls.log.format}_console" for logger config "root"

2018-11-22 13:18:40,632 main ERROR Unable to locate appender "${sys:ls.log.format}_rolling" for logger config "root"

2018-11-22 13:18:40,632 main ERROR Unable to locate appender "${sys:ls.log.format}_rolling_slowlog" for logger config "slowlog"

2018-11-22 13:18:40,633 main ERROR Unable to locate appender "${sys:ls.log.format}_console_slowlog" for logger config "slowlog"

Sending Logstash's logs to /elk/logstash/logs which is now configured via log4j2.properties

[2018-11-22T13:18:41,911][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/elk/logstash/modules/fb_apache/configuration"}

[2018-11-22T13:18:41,915][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/elk/logstash/modules/netflow/configuration"}

[2018-11-22T13:18:41,919][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/elk/logstash/data/queue"}

[2018-11-22T13:18:41,920][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/elk/logstash/data/dead_letter_queue"}

[2018-11-22T13:18:41,965][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"0e15de90-b141-4923-a458-360603465bae", :path=>"/elk/logstash/data/uuid"}

[2018-11-22T13:18:42,645][INFO ][logstash.outputs.elasticsearch] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://127.0.0.1:9200/]}}

[2018-11-22T13:18:42,647][INFO ][logstash.outputs.elasticsearch] Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://127.0.0.1:9200/, :path=>"/"}

[2018-11-22T13:18:42,753][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://127.0.0.1:9200/"}

[2018-11-22T13:18:42,755][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}

[2018-11-22T13:18:42,799][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

[2018-11-22T13:18:42,807][INFO ][logstash.outputs.elasticsearch] Installing elasticsearch template to _template/logstash

[2018-11-22T13:18:43,117][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//127.0.0.1:9200"]}

[2018-11-22T13:18:43,228][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

[2018-11-22T13:18:43,455][INFO ][logstash.pipeline ] Pipeline main started

[2018-11-22T13:18:43,501][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

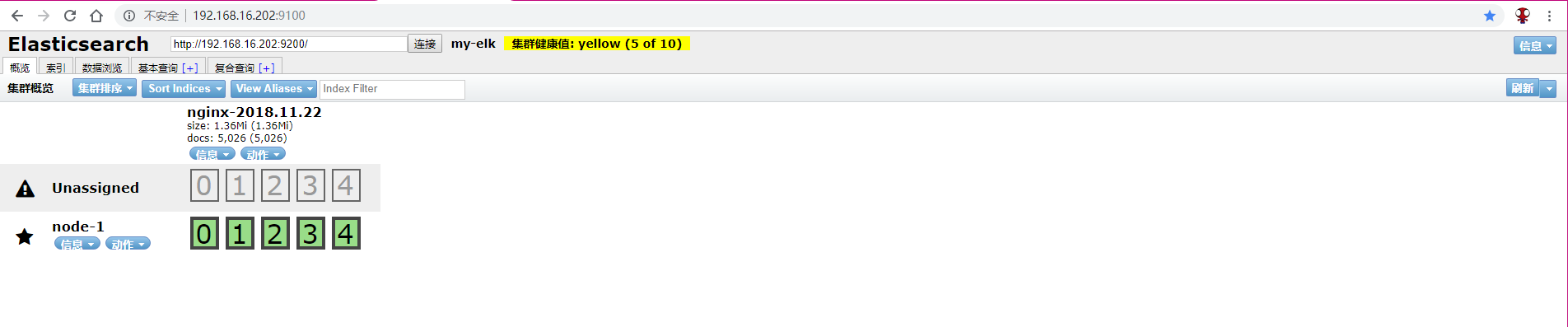

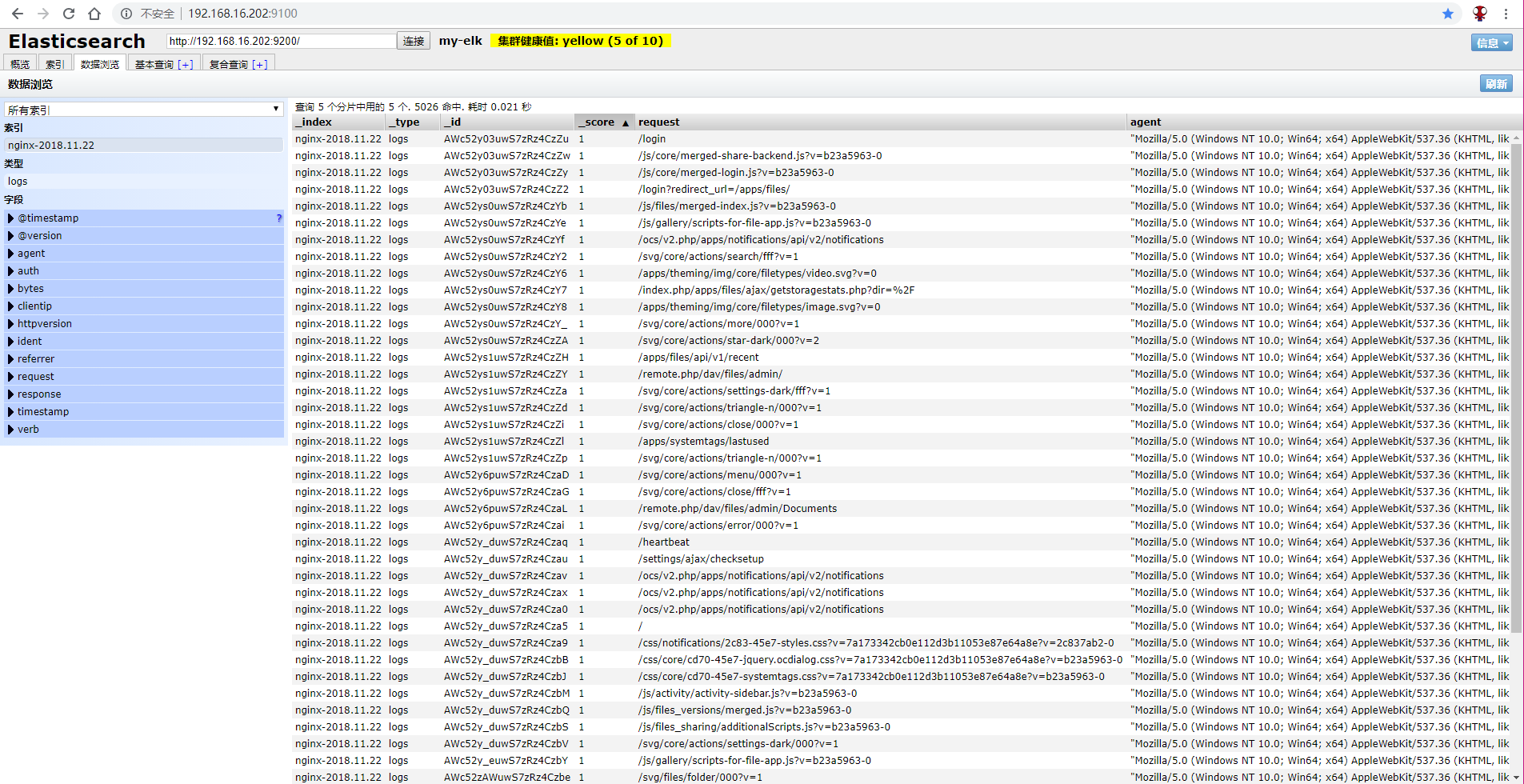

Open elasticsearch-head to check whether log data is arriving.
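The same check can also be done without elasticsearch-head. A minimal Python sketch using only the standard library, assuming Elasticsearch listens on `127.0.0.1:9200` as in the output configuration; `fetch_nginx_indices` and `summarize_indices` are hypothetical helper names. It calls the `_cat/indices` API with `format=json`, which returns one JSON object per index with string columns such as `health`, `index` and `docs.count`.

```python
import json
import urllib.request

ES_URL = "http://127.0.0.1:9200"  # assumption: same host/port as the Logstash output


def fetch_nginx_indices(base_url=ES_URL):
    """Hypothetical helper: list nginx-* indices via the _cat/indices API."""
    # format=json makes the _cat API return a JSON array instead of a text table.
    url = base_url + "/_cat/indices/nginx-*?format=json"
    with urllib.request.urlopen(url) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize_indices(rows):
    """Turn _cat/indices rows into (index, health, doc count) tuples sorted by name."""
    # _cat values are strings; docs.count may be missing for closed indices.
    return sorted((r["index"], r["health"], int(r.get("docs.count") or 0)) for r in rows)
```

On the Elasticsearch host, `print(summarize_indices(fetch_nginx_indices()))` should list one `nginx-YYYY.MM.dd` entry per day, with a document count that grows as nginx serves requests.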

About the yellow cluster health warning

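Elasticsearch is deployed here as a single node, the default number of replicas per shard is 1, and Elasticsearch never places a replica on the same node as its primary. The replica shards therefore cannot be assigned anywhere, so the cluster health shows yellow. You can fix this by adding another node to the cluster.

If you would rather not do that, you can remove the unassigned replica shards. This is not a good practice in general, but it is acceptable as a workaround on a test setup.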

Source of this explanation (original article): https://blog.csdn.net/gamer_gyt/article/details/53230165
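The reasoning above comes down to simple arithmetic. A minimal Python sketch, assuming the Elasticsearch 5.x defaults of 5 primary shards and 1 replica per index, and ignoring other allocation rules such as disk watermarks or allocation filtering; `replica_health` is a hypothetical helper, not an Elasticsearch API.

```python
def replica_health(primaries, replicas, nodes):
    """Hypothetical helper: simplified shard-allocation model for one index.

    Each shard has one primary plus `replicas` replica copies, and no two copies
    of the same shard may share a node, so at most `nodes` copies per shard can
    be assigned. Returns (health colour, number of unassigned replica shards).
    """
    if nodes < 1:
        raise ValueError("model assumes at least one data node")
    copies_per_shard = 1 + replicas
    unassigned = primaries * max(0, copies_per_shard - nodes)
    # With at least one node every primary is assigned, so the worst case is yellow.
    return ("yellow" if unassigned else "green"), unassigned


# Elasticsearch 5.x defaults: 5 primary shards, 1 replica per index.
print(replica_health(5, 1, 1))  # ('yellow', 5): single node, as in this post
print(replica_health(5, 1, 2))  # ('green', 0): after adding a second node
print(replica_health(5, 0, 1))  # ('green', 0): after setting replicas to 0
```

The counts are per index, so with one daily `nginx-*` index, each additional day adds another 5 unassigned replicas on a single node.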

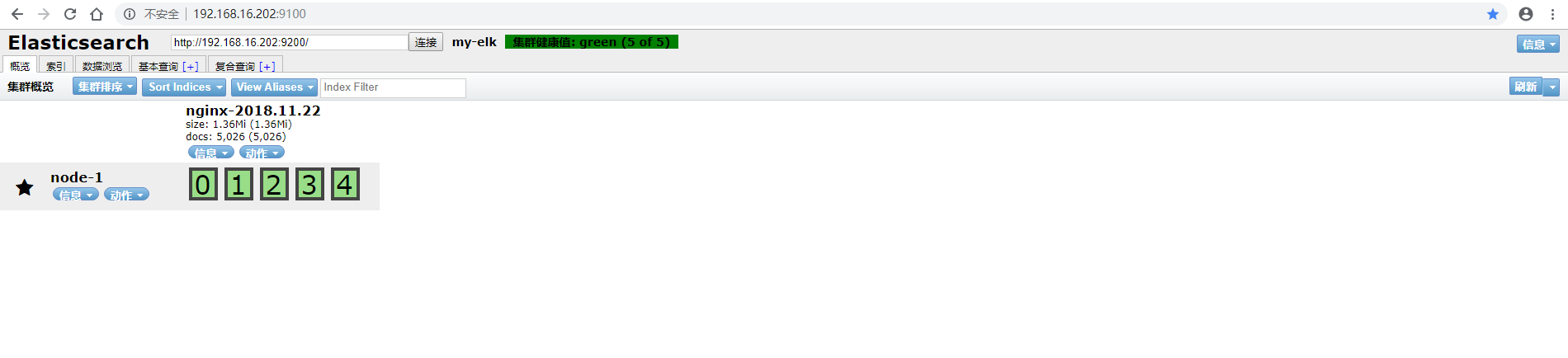

# Remove the replica shards by setting number_of_replicas to 0 on all existing indices

[root@localhost ~]# curl -XPUT "http://localhost:9200/_settings" -H 'Content-Type: application/json' -d' { "number_of_replicas" : 0 } '

{"acknowledged":true}

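Check elasticsearch-head again; with no replicas left to assign, the cluster health should now be green.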
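`PUT /_settings` only changes indices that already exist. The Logstash output above creates a new `nginx-YYYY.MM.dd` index every day, and each new index is created with the default of 1 replica, so the cluster turns yellow again. One way to avoid this is an index template matching `nginx-*`. A minimal Python sketch using only the standard library, assuming Elasticsearch 5.x at `localhost:9200`; the template name `nginx` and the helper names are hypothetical. In 5.x the index pattern goes in the `template` key (6.x and later use `index_patterns` instead).

```python
import json
import urllib.request


def nginx_template_body(pattern="nginx-*", replicas=0):
    """Template body for Elasticsearch 5.x: new indices matching `pattern` get `replicas` replicas."""
    # 5.x reads the index pattern from "template"; 6.x+ renamed it to "index_patterns".
    return {"template": pattern, "settings": {"number_of_replicas": replicas}}


def put_template(name="nginx", base_url="http://localhost:9200"):
    """Hypothetical helper: PUT the template to /_template/<name> and return the JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/_template/{name}",
        data=json.dumps(nginx_template_body()).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `put_template()` once on the server should return an acknowledged response; indices created afterwards that match `nginx-*` then start with 0 replicas, while existing indices keep whatever `_settings` gave them.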

Last updated: 2024-03-31

Article link: https://me.jinchuang.org/archives/311.html

Unless marked [repost|quote|source], articles on this site are original content; please credit the source when reposting.
